Autonomous vehicles collect environmental data from radar, lidar (light detection and ranging) sensors, and cameras. Neural networks and other machine learning algorithms then process this data so the vehicle can construct an accurate world model of its surroundings and its own location. With that model in place, an autonomous vehicle (AV) can formulate a strategy to navigate from one location to another.

1. Vision

One of the major challenges for self-driving cars is perceiving their surroundings. Autonomous vehicles use cameras, lidar, and radar sensors to gather visual data about their environment. These sensors are typically mounted around the vehicle to provide 360-degree coverage. Cameras capture visual details such as road signs and traffic signals, while lidar uses laser beams to map the surroundings and measure distances.

Radar transmits radio waves that bounce off objects, allowing it to detect them quickly and accurately even in fog or rain. Computer vision algorithms then interpret the data from these sensor systems to give the vehicle context about its surroundings.
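Both lidar and radar measure distance by timing how long a pulse takes to return after bouncing off an object. The sketch below shows that time-of-flight calculation, assuming an ideal single echo travelling at the speed of light. The function name is hypothetical, and many automotive radars actually use frequency-modulated continuous waves rather than simple pulses, so treat this as an illustration of the principle only.

```python
# Speed of light in a vacuum (m/s); the small slowdown in air is ignored here.
SPEED_OF_LIGHT_M_S = 299_792_458.0


def range_from_round_trip(round_trip_s: float) -> float:
    """Return the distance (m) to an object from an echo's round-trip time (s).

    Hypothetical helper: the pulse travels out and back, so the one-way
    distance is half of speed multiplied by time.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


if __name__ == "__main__":
    # An echo arriving 200 nanoseconds after the pulse left the sensor.
    print(f"{range_from_round_trip(200e-9):.2f} m")  # prints "29.98 m"
```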

2. Sensors

Sensors enable autonomous vehicles (AVs) to sense their environment. AVs carry an array of sensing layers, including cameras of various resolutions and sizes, radar, and lidar (which uses pulses of laser light to sense distance), that together give them a comprehensive picture of their surroundings.

Radar is an affordable technology that works reliably even in adverse weather, while color cameras can identify traffic lights, signs, and vehicles. Combined with lidar, which measures the shape and depth of objects around the car and the geometry of the road, these sensors supply the algorithms running in each sensing layer with the data needed for safe navigation.
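The article does not say how the outputs of the sensing layers are combined. One simple, standard way to merge several independent measurements of the same quantity is an inverse-variance weighted average, sketched here for a single distance estimate. The noise figures are made up for illustration, the readings are assumed to refer to the same object with independent errors, and production systems typically use richer filters such as Kalman filters.

```python
from dataclasses import dataclass


@dataclass
class RangeReading:
    sensor: str
    distance_m: float
    std_dev_m: float  # assumed 1-sigma noise for this sensor (illustrative value)


def fuse_ranges(readings: list[RangeReading]) -> tuple[float, float]:
    """Inverse-variance weighted average of independent distance estimates.

    Returns (fused distance, fused standard deviation). Sensors with lower
    noise get proportionally more weight.
    """
    if not readings:
        raise ValueError("need at least one reading")
    if any(r.std_dev_m <= 0 for r in readings):
        raise ValueError("standard deviations must be positive")
    weights = [1.0 / r.std_dev_m ** 2 for r in readings]
    total = sum(weights)
    fused = sum(w * r.distance_m for w, r in zip(weights, readings)) / total
    return fused, (1.0 / total) ** 0.5


if __name__ == "__main__":
    readings = [
        RangeReading("radar", 20.4, 0.5),
        RangeReading("lidar", 20.0, 0.1),
        RangeReading("camera", 21.0, 1.0),
    ]
    distance, sigma = fuse_ranges(readings)
    print(f"fused: {distance:.2f} m +/- {sigma:.2f} m")
```

The fused estimate lands closest to the lidar value because that sensor was assigned the smallest noise, and the fused uncertainty is smaller than any single sensor's.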

3. Algorithms

Autonomous vehicles must interpret multiple inputs, such as images from cameras or radar signals bouncing off nearby objects. This data feeds into algorithms that plot collision-free navigation paths.
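As a rough illustration of what "collision-free" means for a candidate path, the sketch below checks that a path made of straight waypoint-to-waypoint segments keeps a minimum clearance from a set of obstacle points. The representation (2D points in metres, one clearance radius, static obstacles) is an assumption for the example. The article does not name a specific planner, and real planners also account for the vehicle's shape and for moving objects.

```python
import math

# Hypothetical 2D representation: (x, y) in metres.
Point = tuple[float, float]


def point_segment_distance(p: Point, a: Point, b: Point) -> float:
    """Shortest distance from point p to the line segment a-b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        return math.hypot(px - ax, py - ay)
    # Project p onto the segment, clamping to the endpoints.
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / seg_len_sq))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def path_is_collision_free(path: list[Point], obstacles: list[Point],
                           clearance_m: float) -> bool:
    """True if every segment of the path keeps at least clearance_m from every obstacle."""
    if not path:
        raise ValueError("path must contain at least one waypoint")
    # A single waypoint is treated as a zero-length segment.
    segments = list(zip(path, path[1:])) or [(path[0], path[0])]
    return all(
        point_segment_distance(obs, a, b) >= clearance_m
        for a, b in segments
        for obs in obstacles
    )


if __name__ == "__main__":
    straight = [(0.0, 0.0), (10.0, 0.0), (20.0, 0.0)]
    parked_car = [(12.0, 1.0)]
    print(path_is_collision_free(straight, parked_car, clearance_m=1.5))  # prints "False"
```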

Autonomous vehicles use neural networks trained on massive quantities of naturalistic driving data to predict the likely actions of other road users, such as a pedestrian about to cross the street. This predictive capability lets autonomous cars avoid close calls and collisions proactively. AI-enhanced motion planning and city-level traffic coordination also hold considerable promise for reducing congestion and emissions by optimizing driving behavior and logistics, while letting passengers be productive because the car does not need a driver's attention.
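The article describes neural networks doing this prediction. As a much simpler stand-in, the sketch below uses a constant-velocity model, a common baseline in motion forecasting, to estimate when a pedestrian would step into the car's lane. The coordinate frame, lane width, and prediction horizon are assumptions chosen for illustration; this is not how a learned predictor works internally.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RoadUser:
    x: float   # metres along the road, in the car's frame
    y: float   # metres across the road; the car's lane spans |y| <= lane_half_width
    vx: float  # m/s along the road
    vy: float  # m/s across the road


def predict_positions(user: RoadUser, horizon_s: float,
                      dt_s: float) -> list[tuple[float, float, float]]:
    """Constant-velocity rollout (a simple stand-in for a learned predictor).

    Returns (t, x, y) samples from t = dt up to the horizon.
    """
    steps = int(round(horizon_s / dt_s))
    return [
        (k * dt_s, user.x + user.vx * k * dt_s, user.y + user.vy * k * dt_s)
        for k in range(1, steps + 1)
    ]


def time_entering_lane(user: RoadUser, lane_half_width: float,
                       horizon_s: float = 3.0, dt_s: float = 0.1) -> Optional[float]:
    """First predicted time (s) the road user is inside the lane, or None."""
    for t, _, y in predict_positions(user, horizon_s, dt_s):
        if abs(y) <= lane_half_width:
            return t
    return None


if __name__ == "__main__":
    pedestrian = RoadUser(x=20.0, y=4.0, vx=0.0, vy=-1.5)  # walking toward the lane
    print(time_entering_lane(pedestrian, lane_half_width=1.8))
```

A planner could compare that time with when the car itself will reach x = 20 m to decide whether to slow down early.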

4. Planning

The next step for an autonomous vehicle is to create a detailed map of its environment using inputs from sensors such as lidar and cameras. This map allows the vehicle to recognize and categorize objects on the road, including static obstacles as well as dynamic changes such as other vehicles changing lanes.
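One widely used map representation for this kind of step is an occupancy grid, which divides the area around the car into cells and marks the ones where sensors detected something. The sketch below builds the simplest binary version from 2D lidar returns. The cell size, map extent, and vehicle-centred frame are assumptions, and practical systems usually store occupancy probabilities and also mark the free space the laser passed through.

```python
import math


def build_occupancy_grid(points: list[tuple[float, float]], cell_size_m: float,
                         half_extent_m: float) -> list[list[bool]]:
    """Binary occupancy grid centred on the vehicle (illustrative assumptions).

    points: lidar returns as (x, y) in metres, vehicle at the origin.
    grid[row][col] is True if at least one return fell in that cell;
    rows follow y and columns follow x, starting from -half_extent_m.
    """
    n = math.ceil(2 * half_extent_m / cell_size_m)
    grid = [[False] * n for _ in range(n)]
    for x, y in points:
        col = math.floor((x + half_extent_m) / cell_size_m)
        row = math.floor((y + half_extent_m) / cell_size_m)
        if 0 <= row < n and 0 <= col < n:  # ignore returns outside the map
            grid[row][col] = True
    return grid


if __name__ == "__main__":
    returns = [(0.5, 0.5), (-4.9, 4.9), (20.0, 0.0)]  # the last one is out of range
    grid = build_occupancy_grid(returns, cell_size_m=1.0, half_extent_m=5.0)
    for row in reversed(grid):  # print with +y at the top
        print("".join("#" if cell else "." for cell in row))
```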

Software in the vehicle then combines this information with its learned experience and knowledge of road rules to predict, within milliseconds, what other drivers and pedestrians will do. That is an impressive feat, considering how different driving conditions in Cairo or Bangkok are from those on California highways.

5. Communication

Autonomous vehicles must be able to communicate effectively with their environment, including infrastructure, other cars, and pedestrians, to navigate safely. Companies such as Waymo, which began as Google's self-driving car project, have long been developing autonomous vehicle technologies and have tested these systems extensively on a fleet of Chrysler Pacifica hybrid minivans.
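Vehicle-to-vehicle communication works by cars broadcasting short status messages to one another. Standards such as SAE J2735 define a real Basic Safety Message for this purpose, encoded in a compact binary format. The sketch below is only a hypothetical, simplified stand-in that uses JSON, with field names invented for illustration, to show the kind of information such a message carries.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class SafetyMessage:
    """Hypothetical broadcast status message; fields are illustrative, not from a standard."""
    sender_id: str
    timestamp_ms: int
    latitude_deg: float
    longitude_deg: float
    speed_m_s: float
    heading_deg: float          # 0 = north, increasing clockwise
    hard_braking: bool = False  # signals a sudden stop to nearby cars

    def encode(self) -> bytes:
        """Serialize to UTF-8 JSON bytes for transmission."""
        return json.dumps(asdict(self), sort_keys=True).encode("utf-8")

    @classmethod
    def decode(cls, payload: bytes) -> "SafetyMessage":
        """Rebuild a message from bytes produced by encode()."""
        return cls(**json.loads(payload.decode("utf-8")))


if __name__ == "__main__":
    msg = SafetyMessage("veh-42", 1_700_000_000_000, 37.4219, -122.0840,
                        13.4, 90.0, hard_braking=True)
    received = SafetyMessage.decode(msg.encode())
    print(received == msg, received.hard_braking)  # prints "True True"
```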

Autonomous cars rely on sensors and cameras to stay aware of their surroundings. Artificial intelligence algorithms process and interpret the captured data to make decisions similar to those of human drivers. By eliminating human error, the leading cause of car accidents, autonomous cars could save lives and help reduce traffic congestion.

6. Control

Autonomous cars are designed to save lives by eliminating human error as a cause of car accidents. To do so effectively, they need to respond almost instantly to changing conditions.
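The article does not describe the control law itself. A common textbook way to make a vehicle respond continuously to changing conditions is a PID (proportional-integral-derivative) controller, which adjusts its output based on the current error, the accumulated error, and how fast the error is changing. The sketch below uses one, with a simple anti-windup rule, to track a target speed on a toy point-mass car. The gains and acceleration limits are made-up values, not tuned for any real vehicle.

```python
class PID:
    """PID controller with output limits and simple anti-windup (illustrative)."""

    def __init__(self, kp: float, ki: float, kd: float,
                 out_min: float, out_max: float) -> None:
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_min, self.out_max = out_min, out_max
        self.integral = 0.0
        self.prev_error = None  # no derivative term until a second sample arrives

    def update(self, setpoint: float, measured: float, dt: float) -> float:
        error = setpoint - measured
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        trial_integral = self.integral + error * dt
        output = self.kp * error + self.ki * trial_integral + self.kd * derivative
        if self.out_min <= output <= self.out_max:
            # Conditional integration: only accumulate error while the output is
            # not saturated, so the integral does not "wind up" and cause overshoot.
            self.integral = trial_integral
        return max(self.out_min, min(self.out_max, output))


def simulate_speed(target_m_s: float, seconds: float, dt: float = 0.1) -> float:
    """Drive a toy point-mass car toward target_m_s and return its final speed."""
    # Illustrative gains; output is acceleration, limited to -6..3 m/s^2 (assumed).
    pid = PID(kp=0.8, ki=0.2, kd=0.05, out_min=-6.0, out_max=3.0)
    speed = 0.0
    for _ in range(round(seconds / dt)):
        speed += pid.update(target_m_s, speed, dt) * dt  # point mass: dv = a * dt
    return speed


if __name__ == "__main__":
    print(f"{simulate_speed(15.0, 30.0):.2f} m/s")
```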

Sensors placed around the vehicle create and maintain a map of its environment for the autonomous car to use. Together, radar, cameras, lidar (a light detection and ranging system that bounces pulses of laser light off objects to measure distances), and ultrasonic sensors let the car recognize traffic lights, read road signs, and spot pedestrians. Neural networks use this data to recognize objects; for instance, the software can tell bikes apart from cars.
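The article does not detail how a neural network's output becomes a label such as "bike" or "car". In a typical classifier, the network's last layer produces one raw score per class, and a softmax function turns those scores into probabilities; the highest one is taken as the label. The sketch below shows only that final step, with a hypothetical label set and made-up scores standing in for a real network's output.

```python
import math

CLASSES = ("car", "bike", "pedestrian")  # hypothetical label set


def softmax(scores: list[float]) -> list[float]:
    """Convert raw class scores into probabilities that sum to 1."""
    m = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify(scores: list[float], classes: tuple[str, ...] = CLASSES) -> tuple[str, float]:
    """Return the most likely class and its probability."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=lambda i: probs[i])
    return classes[best], probs[best]


if __name__ == "__main__":
    fake_network_output = [1.2, 3.4, 0.3]  # made-up scores for one detected object
    label, confidence = classify(fake_network_output)
    print(f"{label} ({confidence:.0%})")  # prints "bike (87%)"
```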

7. Decision-making

As autonomous cars mature, their software is becoming capable of detecting what is around them, such as road markings, other vehicles, and pedestrians. Based on that information, the software decides whether to accelerate, swerve, or continue driving normally.
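As a toy illustration of this kind of choice, the rule-based sketch below picks an action from the time-to-collision with the vehicle ahead, that is, the gap divided by how fast the car is closing on it. The thresholds are invented, and "brake" is added as an assumed fallback for when swerving is not possible. Real driving software weighs far more information, often with learned models rather than fixed rules.

```python
from typing import Optional


def time_to_collision(gap_m: float, closing_speed_m_s: float) -> Optional[float]:
    """Seconds until contact if both vehicles hold their speeds; None if not closing."""
    if closing_speed_m_s <= 0:
        return None
    return gap_m / closing_speed_m_s


def choose_action(gap_m: float, closing_speed_m_s: float, adjacent_lane_free: bool,
                  speed_m_s: float, speed_limit_m_s: float,
                  ttc_critical_s: float = 2.0, ttc_comfort_s: float = 6.0) -> str:
    """Hypothetical decision rule; thresholds are illustrative, not from any real system."""
    ttc = time_to_collision(gap_m, closing_speed_m_s)
    if ttc is not None and ttc < ttc_critical_s:
        # Imminent conflict: change lanes if possible, otherwise brake (assumed fallback).
        return "swerve" if adjacent_lane_free else "brake"
    if (ttc is None or ttc > ttc_comfort_s) and speed_m_s < speed_limit_m_s:
        return "accelerate"  # road ahead is clear and the car is below the limit
    return "continue"


if __name__ == "__main__":
    print(choose_action(gap_m=15.0, closing_speed_m_s=10.0, adjacent_lane_free=True,
                        speed_m_s=25.0, speed_limit_m_s=30.0))  # prints "swerve"
```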

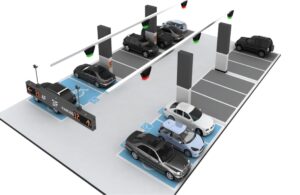

But their decision-making is not perfect: there have been reports of self-driving cars hesitating or veering unnecessarily. Autonomous vehicle manufacturers are addressing this problem with sensors that read road signs and traffic lights and by using information from vehicles ahead. Autonomous vehicles can also communicate with each other and with infrastructure, such as smart parking systems, to avoid collisions and reduce congestion.

8. Routing

Sensors such as lidar and cameras help autonomous cars build a virtual map of their environment in a fraction of a second, which allows them to determine whether it is safe to merge into traffic or pass pedestrians.
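The article does not explain how the car judges whether a merge is safe. A simple approach used in traffic modelling is gap acceptance: the gaps to the vehicles ahead of and behind the car in the target lane must each exceed a minimum time headway at the relevant speed. The sketch below assumes that rule with made-up thresholds (a 1.5-second headway and a 5-metre floor) and ignores acceleration and the other drivers' intentions.

```python
def required_gap_m(follower_speed_m_s: float, min_headway_s: float,
                   min_gap_m: float) -> float:
    """Gap the following vehicle needs: a time headway, but never less than min_gap_m."""
    return max(min_gap_m, follower_speed_m_s * min_headway_s)


def safe_to_merge(lead_gap_m: float, lag_gap_m: float, ego_speed_m_s: float,
                  lag_speed_m_s: float, min_headway_s: float = 1.5,
                  min_gap_m: float = 5.0) -> bool:
    """Gap-acceptance check for a lane change (illustrative thresholds).

    lead_gap_m: distance to the vehicle ahead in the target lane (our car follows it).
    lag_gap_m: distance to the vehicle behind in the target lane (it follows our car).
    """
    lead_ok = lead_gap_m >= required_gap_m(ego_speed_m_s, min_headway_s, min_gap_m)
    lag_ok = lag_gap_m >= required_gap_m(lag_speed_m_s, min_headway_s, min_gap_m)
    return lead_ok and lag_ok


if __name__ == "__main__":
    # Car at 20 m/s; the vehicle behind in the target lane is doing 25 m/s.
    print(safe_to_merge(lead_gap_m=40.0, lag_gap_m=30.0,
                        ego_speed_m_s=20.0, lag_speed_m_s=25.0))  # prints "False"
```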

Autonomous vehicles must adapt to changing road conditions, from construction projects and weather events to natural disasters and emergencies. To manage such events safely, autonomous cars use sensors to detect hazards such as debris in the road or people moving quickly. Autonomous vehicle navigation includes two key functions: local path planning and global routing. Local path planning makes real-time adjustments, while global routing identifies the optimal route from any given start to any given destination.
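Global routing is classically framed as a shortest-path search over a road network. The sketch below uses Dijkstra's algorithm on a small made-up graph whose edge costs could stand for, say, travel time in minutes. It is one standard technique rather than what any particular vehicle uses, and large-scale routers typically add speed-ups such as A* search or precomputed shortcuts.

```python
import heapq
from typing import Optional

# Hypothetical road graph: node -> list of (neighbor, non-negative cost).
Graph = dict[str, list[tuple[str, float]]]


def shortest_route(graph: Graph, start: str, goal: str) -> Optional[tuple[float, list[str]]]:
    """Dijkstra's algorithm: return (total cost, node list), or None if unreachable."""
    dist = {start: 0.0}
    prev = {}  # node -> predecessor on the best known route
    heap = [(0.0, start)]
    done = set()
    while heap:
        d, node = heapq.heappop(heap)
        if node in done:
            continue  # stale queue entry
        done.add(node)
        if node == goal:
            break
        for neighbor, cost in graph.get(node, []):
            new_d = d + cost
            if new_d < dist.get(neighbor, float("inf")):
                dist[neighbor] = new_d
                prev[neighbor] = node
                heapq.heappush(heap, (new_d, neighbor))
    if goal not in done:
        return None
    route = [goal]
    while route[-1] != start:
        route.append(prev[route[-1]])
    return dist[goal], route[::-1]


if __name__ == "__main__":
    roads: Graph = {
        "A": [("B", 4.0), ("C", 2.0)],
        "B": [("D", 5.0)],
        "C": [("B", 1.0), ("D", 8.0)],
        "D": [],
    }
    print(shortest_route(roads, "A", "D"))  # prints "(8.0, ['A', 'C', 'B', 'D'])"
```

When conditions change (a closed road, heavy traffic), updating edge costs and rerunning the search yields a new global route, while local path planning handles the moment-to-moment adjustments.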

9. Safety

Autonomous vehicles must obey road traffic rules, avoid collisions where possible, treat other road users courteously, and stay within their operational boundaries without creating disruptions. These requirements are considered essential safety standards.
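The "operational boundaries" mentioned here are often formalized as an operational design domain (ODD), a term defined in SAE J3016: the conditions, such as road types, speeds, and weather, under which an automated driving system is designed to work. The sketch below checks current conditions against a hypothetical ODD; the fields and limits are illustrative assumptions, and a real ODD specification covers many more factors.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OperationalDesignDomain:
    """Hypothetical ODD description; real ones cover many more factors."""
    max_speed_m_s: float
    min_visibility_m: float
    allowed_road_types: frozenset[str]


@dataclass(frozen=True)
class Conditions:
    speed_m_s: float
    visibility_m: float
    road_type: str


def odd_violations(odd: OperationalDesignDomain, now: Conditions) -> list[str]:
    """List every way the current conditions fall outside the ODD (empty if none)."""
    problems = []
    if now.speed_m_s > odd.max_speed_m_s:
        problems.append("speed above ODD limit")
    if now.visibility_m < odd.min_visibility_m:
        problems.append("visibility below ODD minimum")
    if now.road_type not in odd.allowed_road_types:
        problems.append(f"road type '{now.road_type}' outside ODD")
    return problems


if __name__ == "__main__":
    odd = OperationalDesignDomain(30.0, 100.0, frozenset({"highway", "arterial"}))
    print(odd_violations(odd, Conditions(25.0, 60.0, "highway")))
    # prints "['visibility below ODD minimum']"
```

A non-empty result would mean the system needs to respond, for example by handing control back to a driver or bringing the car to a safe stop; that response is outside this sketch.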

To do this effectively, they must build and continually update an internal map of their environment by collecting data from multiple sensors. They must also respond swiftly to unexpected situations, including sudden weather that reduces visibility or makes roads hazardous, and be robust enough to cope with software bugs or sensor errors when they arise.
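Coping with sensor errors usually starts with detecting them. The sketch below shows one simple pattern: flag readings that are stale (too old) or implausible (outside the sensor's range), then choose a driving mode based on how many sensors still look healthy. The sensor names, thresholds, and mode names are hypothetical, chosen only to illustrate graceful degradation.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    sensor: str
    timestamp_s: float  # when the reading was taken
    distance_m: float   # reported distance to the nearest object ahead


def healthy_sensors(readings: list[Reading], now_s: float,
                    max_age_s: float = 0.2, max_range_m: float = 250.0) -> list[str]:
    """Names of sensors whose latest reading is both fresh and physically plausible.

    max_age_s and max_range_m are illustrative thresholds, not real sensor specs.
    """
    ok = []
    for r in readings:
        fresh = 0.0 <= now_s - r.timestamp_s <= max_age_s
        plausible = 0.0 <= r.distance_m <= max_range_m
        if fresh and plausible:
            ok.append(r.sensor)
    return ok


def driving_mode(readings: list[Reading], now_s: float, min_healthy: int = 2) -> str:
    """Pick a (hypothetical) mode from the number of healthy sensors."""
    n = len(healthy_sensors(readings, now_s))
    if n >= min_healthy:
        return "normal"
    if n > 0:
        return "degraded"      # e.g. slow down and increase following distance
    return "minimal_risk"      # e.g. pull over and stop safely


if __name__ == "__main__":
    readings = [
        Reading("lidar", 9.95, 30.2),
        Reading("radar", 9.90, -1.0),   # negative distance: implausible
        Reading("camera", 9.50, 29.8),  # 0.5 s old: stale
    ]
    print(driving_mode(readings, now_s=10.0))  # prints "degraded"
```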